Segment Anything

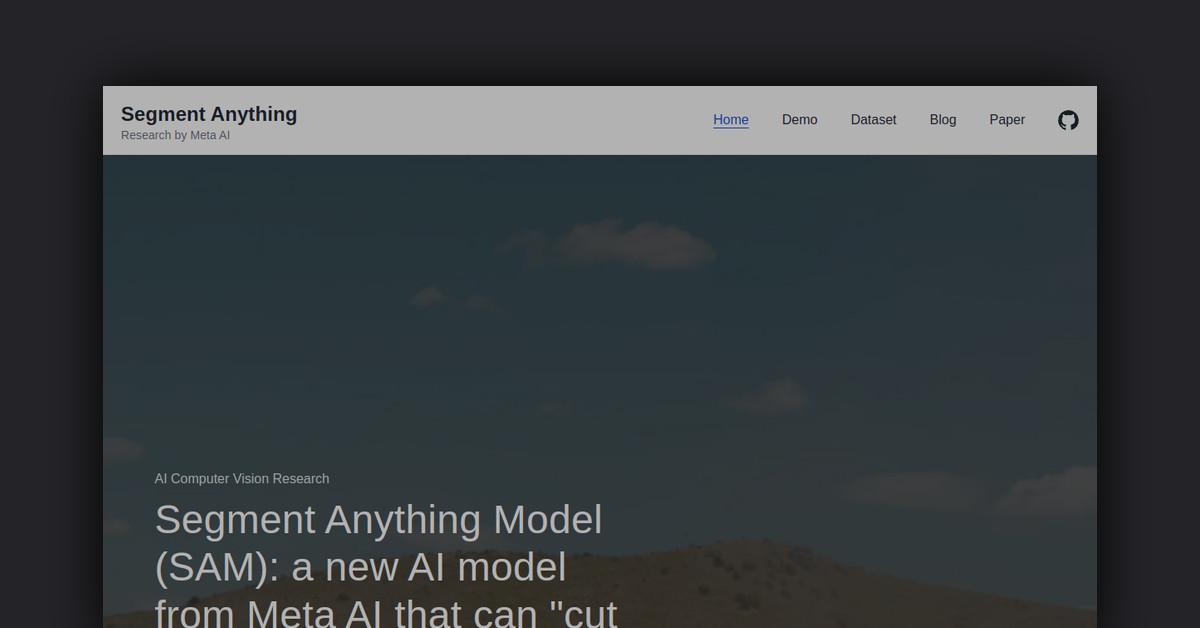

What is Segment Anything?

The Segment Anything Model (SAM) is an innovative AI solution developed by Meta AI that enables users to segment any object within an image with a single click. SAM operates as a promptable segmentation system, capable of generalizing to new objects and images without requiring additional training.

What Makes Segment Anything Unique?

SAM has learned a general notion of what an object is, which lets it segment unfamiliar objects and images without further training. Its design splits the work between a heavy image encoder and a lightweight mask decoder:

- Image Encoder: This component computes an embedding of the entire image using a vision transformer model (ViT-H). The embedding is computed only once per image and reused for every prompt.

- Mask Decoder: This lightweight decoder takes the image embedding and a prompt embedding and predicts segmentation masks. Prompts can be points, boxes, or rough masks; text descriptions of the desired segmentation were explored experimentally. The mask decoder runs in real time on a CPU.
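The encode-once, decode-per-prompt split described above can be sketched in a few lines. This is an illustration of the caching pattern only, not SAM's actual code: `PromptableSegmenter`, `fake_encoder`, and `fake_decoder` are hypothetical names, and the stand-in functions return dummy values so the sketch runs without a model.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Point = Tuple[int, int]


@dataclass
class PromptableSegmenter:
    """Caches one embedding per image and reuses it for every prompt."""

    encode_image: Callable[[str], List[float]]        # heavy step, once per image
    decode_mask: Callable[[List[float], Point], str]  # light step, once per prompt
    _cache: Dict[str, List[float]] = field(default_factory=dict)

    def segment(self, image_id: str, click: Point) -> str:
        # Run the expensive encoder only the first time an image is seen.
        if image_id not in self._cache:
            self._cache[image_id] = self.encode_image(image_id)
        return self.decode_mask(self._cache[image_id], click)


# Hypothetical stand-ins for the real encoder and decoder.
encoder_calls: List[str] = []


def fake_encoder(image_id: str) -> List[float]:
    encoder_calls.append(image_id)
    return [0.0, 1.0]


def fake_decoder(embedding: List[float], click: Point) -> str:
    return f"mask@{click}"


segmenter = PromptableSegmenter(fake_encoder, fake_decoder)
results = [segmenter.segment("photo.jpg", c) for c in [(10, 20), (30, 40), (50, 60)]]
print(len(encoder_calls), results)
```

Three clicks on the same image trigger a single encoder call, which is why interactive use stays responsive after the first prompt.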

Key Features

- Zero-shot Generalization: Segment objects and images that have never been encountered before without additional training.

- Interactive Prompts: Click points or draw boxes to indicate what to segment.

- Text Prompts: Specify objects to segment using text (e.g., “segment the dog”). Meta AI explored this as a proof of concept; the publicly released model does not include text prompting.

- Automatic Segmentation: Automatically segment all objects within an image.

- Ambiguity-aware: Provides multiple valid masks when prompts are ambiguous.

- Real-time Performance: The mask decoder operates in approximately 50ms on a CPU, facilitating real-time interactive segmentation.

- Extensible Outputs: The predicted masks can be utilized in various applications such as video tracking, 3D lifting, and image editing.
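Two of the features above, ambiguity-aware output and reusable masks, can be illustrated with plain Python lists. This is a minimal sketch, assuming masks are rows of 0/1 values and each candidate comes with a quality score; the helper names `pick_best_mask` and `mask_to_box` and the toy scores are made up for the example.

```python
from typing import List, Sequence, Tuple

Mask = List[List[int]]  # rows of 0/1 pixel values


def pick_best_mask(masks: Sequence[Mask], scores: Sequence[float]) -> Mask:
    """Return the candidate mask with the highest quality score."""
    if not masks or len(masks) != len(scores):
        raise ValueError("need one score per mask and at least one mask")
    best_index = max(range(len(scores)), key=lambda i: scores[i])
    return masks[best_index]


def mask_to_box(mask: Mask) -> Tuple[int, int, int, int]:
    """Tight (x_min, y_min, x_max, y_max) box around the nonzero pixels."""
    ys = [y for y, row in enumerate(mask) if any(row)]
    xs = [x for row in mask for x, value in enumerate(row) if value]
    if not ys:
        raise ValueError("mask is empty")
    return min(xs), min(ys), max(xs), max(ys)


# Toy candidates for one ambiguous click: a whole object and one of its parts.
whole = [[0, 1, 1, 0], [0, 1, 1, 0], [0, 1, 1, 0], [0, 0, 0, 0]]
part = [[0, 0, 0, 0], [0, 1, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]]

best = pick_best_mask([whole, part], [0.91, 0.78])
print(mask_to_box(best))  # (1, 0, 2, 2)
```

A downstream tool such as an image editor could use the box to crop the selected object, or offer the lower-scored candidates as alternatives.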

Pros & Cons Table

| Pros | Cons |
|---|---|
| Highly flexible and promptable interface | No official support available |
| Real-time mask decoding on CPU | Limited to the capabilities of the underlying model |
| Zero-shot generalization capabilities | May require technical knowledge for integration |

Who is Using Segment Anything?

SAM is beneficial for a variety of users, including:

- Developers: Easily integrate segmentation features into applications with SAM’s flexible prompts and real-time capabilities.

- Researchers: Utilize SAM’s zero-shot segmentation abilities to explore new applications.

- Creative Professionals: Access sophisticated segmentation tools to enhance workflows in visual effects, graphic design, and more.

- Businesses: Streamline processes that involve extracting objects from images and videos.

Support Options

As an open-source model, SAM currently lacks official support. However, users can report issues and contribute through the project's GitHub repository (facebookresearch/segment-anything). The Meta AI team also engages in discussions about SAM on social media and community forums.

Pricing

SAM is available as a free, open-source model on GitHub under the Apache 2.0 license, with no usage limits.

Please note that pricing information may not be up to date. For the most accurate and current pricing details, refer to the official Segment Anything website.

Integrations and API

SAM’s open and flexible architecture allows for integration into various applications and workflows. Some explored integrations include:

- Python, PyTorch, ONNX

- Web integration via WebAssembly

- Adobe Photoshop plugins

- VR integration with Oculus headsets

SAM also provides an open-source Python API for inference, allowing users to load the model, encode images, generate prompt embeddings, and decode masks.
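The load, encode, prompt, and decode steps could look roughly like the sketch below. It assumes the `segment_anything` package from the GitHub repository is installed and that a ViT-H checkpoint has been downloaded; `checkpoint_path` is a placeholder, and `build_point_prompt` and `segment_with_clicks` are hypothetical helper names introduced for this example.

```python
from typing import List, Sequence, Tuple

Click = Tuple[int, int, bool]  # (x, y, is_foreground)


def build_point_prompt(clicks: Sequence[Click]) -> Tuple[List[List[int]], List[int]]:
    """Split clicks into (x, y) coordinates and 1/0 foreground labels."""
    coords = [[x, y] for x, y, _ in clicks]
    labels = [1 if is_foreground else 0 for _, _, is_foreground in clicks]
    return coords, labels


def segment_with_clicks(image_rgb, checkpoint_path: str, clicks: Sequence[Click]):
    """Run SAM on an HxWx3 uint8 RGB array and return candidate masks and scores."""
    # Imported lazily so build_point_prompt works without the model installed.
    import numpy as np
    from segment_anything import SamPredictor, sam_model_registry

    sam = sam_model_registry["vit_h"](checkpoint=checkpoint_path)
    predictor = SamPredictor(sam)
    predictor.set_image(image_rgb)  # the image encoder runs once here

    coords, labels = build_point_prompt(clicks)
    masks, scores, _low_res_logits = predictor.predict(
        point_coords=np.array(coords),
        point_labels=np.array(labels),
        multimask_output=True,  # return several masks for ambiguous prompts
    )
    return masks, scores


coords, labels = build_point_prompt([(500, 375, True), (100, 50, False)])
print(coords, labels)  # [[500, 375], [100, 50]] [1, 0]
```

Calling `predictor.predict` again with different clicks reuses the embedding computed by `set_image`, so only the lightweight decoder runs for each new prompt.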

FAQ

- How was SAM trained? SAM was trained on a dataset comprising 11 million images with over 1 billion masks, collected using a model-in-the-loop “data engine.”

- What types of prompts can SAM accept? SAM supports point clicks, bounding boxes, and rough masks. Text prompts were explored experimentally but are not part of the released model.

- What is SAM’s inference speed? The image encoder takes approximately 150ms on an NVIDIA A100 GPU, while the prompt encoder and mask decoder take around 50ms on a CPU.

- What platforms does SAM support? The image encoder utilizes PyTorch, and the mask decoder supports PyTorch, ONNX, and WebAssembly.

- What is the size of SAM? The image encoder consists of 632M parameters, while the mask decoder has 4M parameters.
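The speed and size figures above imply that the per-image encoding cost is paid once and then amortized across prompts. A small worked calculation, assuming the approximate numbers quoted in this FAQ and ignoring any transfer or preprocessing overhead:

```python
ENCODER_MS = 150  # per image, approximate GPU figure from the FAQ
DECODER_MS = 50   # per prompt, approximate CPU figure from the FAQ

ENCODER_PARAMS_M = 632  # image encoder parameters, in millions
DECODER_PARAMS_M = 4    # mask decoder parameters, in millions


def total_latency_ms(num_prompts: int) -> int:
    """One encoder pass plus one decoder pass per prompt on the same image."""
    if num_prompts < 0:
        raise ValueError("num_prompts must be non-negative")
    return ENCODER_MS + DECODER_MS * num_prompts


def amortized_ms_per_prompt(num_prompts: int) -> float:
    """Average cost per prompt once the encoder pass is shared."""
    if num_prompts < 1:
        raise ValueError("need at least one prompt")
    return total_latency_ms(num_prompts) / num_prompts


decoder_share_pct = round(
    100 * DECODER_PARAMS_M / (ENCODER_PARAMS_M + DECODER_PARAMS_M), 2
)

print(total_latency_ms(1))          # 200
print(total_latency_ms(10))         # 650
print(amortized_ms_per_prompt(10))  # 65.0
print(decoder_share_pct)            # 0.63
```

With ten prompts on one image, the average cost per prompt falls from 200ms to 65ms, and the decoder accounts for well under 1% of the model's parameters.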
