
MatX


Overview of MatX

MatX is an AI hardware company that designs chips specifically for large language models (LLMs). Its stated mission is to become the premier compute platform for artificial general intelligence (AGI) by making AI both more capable and cheaper to run. Conventional GPUs serve a wide range of machine learning models; MatX builds hardware for LLMs only, which allows the hardware and software to be designed together more tightly.

The MatX team includes industry veterans with experience in ASIC design, compilers, and high-performance software development, among them Reiner Pope and Mike Gunter, who previously played key roles in technologies developed at Google. The company also reports backing from leading LLM companies and investment from top-tier organizations.

What is MatX?

MatX is an AI hardware provider that specializes in high-performance chips designed specifically for large language models (LLMs).

What Makes MatX Unique?

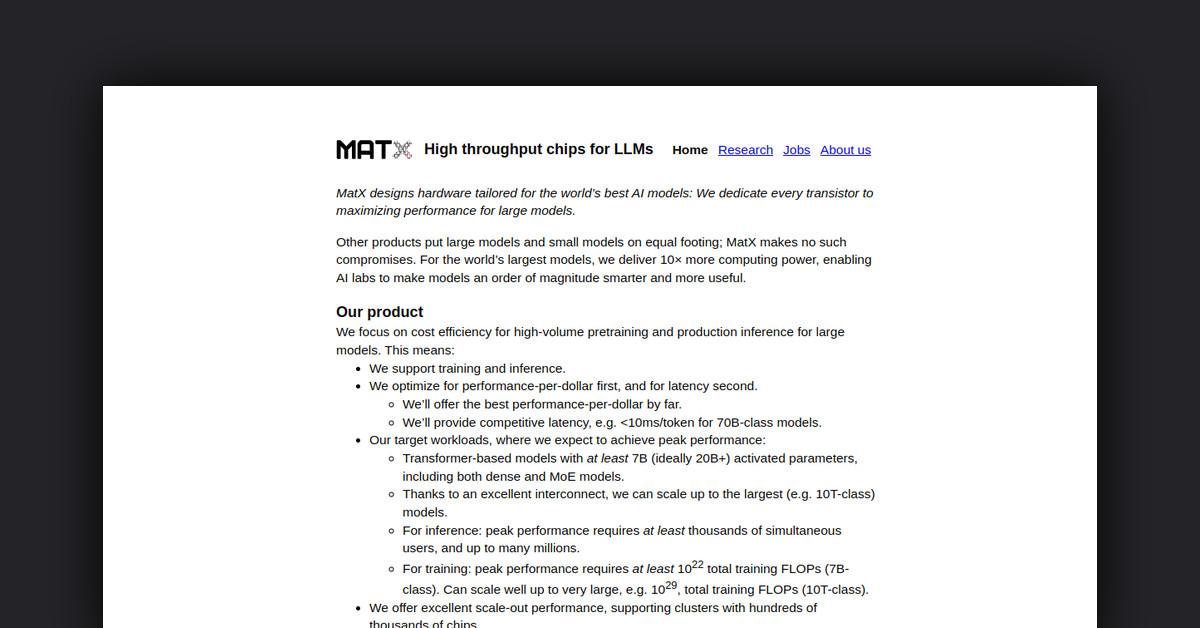

MatX differentiates itself in the AI hardware sector with high-throughput chips built for LLMs, with the design goal that every transistor contributes to performance on large AI models. Other solutions treat models of all sizes equally; MatX instead targets the largest models and claims a tenfold increase in computing power for them, which it says will let AI labs build significantly smarter and more effective models.

Key Features

- Performance-Per-Dollar: MatX claims the best performance-to-cost ratio for LLM workloads, which would lower the cost of both model training and inference.

- Competitive Latency: MatX targets latency under 10 ms/token for 70B-class models, for fast response times in AI applications.

- Optimal Scale-Out Performance: The hardware supports clusters with hundreds of thousands of chips, enabling large-scale AI initiatives.

- Low-Level Hardware Control: Expert users can access low-level control over the hardware for tailored optimizations.
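To put the latency figure in the list above into practical terms, here is a minimal sketch that converts a per-token latency into a per-stream generation rate and an approximate response time. The function names and the 500-token response length are illustrative assumptions, not MatX specifications; the only number taken from the text is the 10 ms/token upper bound.

```python
def tokens_per_second(latency_ms_per_token: float) -> float:
    """Per-stream generation rate implied by a per-token latency."""
    return 1000.0 / latency_ms_per_token


def response_time_seconds(num_tokens: int, latency_ms_per_token: float) -> float:
    """Approximate time to generate num_tokens sequentially for one request."""
    return num_tokens * latency_ms_per_token / 1000.0


# 10 ms/token is the upper bound quoted for 70B-class models.
LATENCY_MS = 10.0
# Hypothetical response length, chosen only for illustration.
RESPONSE_TOKENS = 500

rate = tokens_per_second(LATENCY_MS)
duration = response_time_seconds(RESPONSE_TOKENS, LATENCY_MS)
print(f"{rate:.0f} tokens/s per stream, {duration:.1f} s for {RESPONSE_TOKENS} tokens")
```

At the quoted bound, a single request would receive at least 100 tokens per second. This ignores prompt processing time and any batching effects, which the source does not describe.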

Pros & Cons Table

| Pros | Cons |
|---|---|
| Exceptional performance-per-dollar ratio | May require specialized knowledge for optimal use |
| Low latency for large models | Limited to LLM applications |
| Scalable for extensive projects | Potentially high initial investment |

Who is Using MatX?

MatX serves a diverse clientele, including:

- AI Researchers: Individuals and teams striving to advance AI model capabilities.

- Startups: Emerging companies aiming to innovate in the AI sector with limited resources.

- Large Enterprises: Organizations engaged in large-scale AI projects that require substantial computational power.

Support Options

For inquiries and support, users can reach out to MatX directly through their official website for assistance and resources.

Pricing

Please note that pricing information may not be up to date. For the most accurate and current pricing details, refer to the official MatX website.

Integrations and API

MatX offers various integrations and APIs to facilitate seamless connectivity with existing systems and enhance functionality for users.

FAQ

- Is there a free trial available? For information on trials and demonstrations, it is recommended to contact MatX directly.

- What distinguishes MatX from traditional GPUs? MatX chips are specifically designed for LLMs, providing superior performance-per-dollar and efficiency for large AI models compared to GPUs that cater to a broader range of machine learning models.

Useful Links and Resources

For more information on MatX, including details on its technology, team, and mission, and to explore open roles in hardware, software, and machine learning, visit the official website: https://matx.com/.

TL;DR

MatX is redefining the AI hardware landscape with its specialized chips for LLMs. By prioritizing efficiency, performance, and cost-effectiveness, MatX is not only advancing the technological capabilities of AI models but also making them more accessible to startups and researchers. As the AI field continues to evolve, MatX is well-positioned to play a crucial role in shaping the future of artificial intelligence.
